News at the HPC²

CAVS' Doude and Carruth Write for the 'Conversation' about Autonomous Research at MSU

November 8, 2018

Halo Project Car Render

Many paved roads, though, have faded paint, signs obscured behind trees and unusual intersections. In addition, 1.4 million miles of U.S. roads – one-third of the country’s public roadways – are unpaved, with no on-road signals like lane markings or stop-here lines. That doesn’t include miles of private roads, unpaved driveways or off-road trails.

What’s a rule-following autonomous car to do when the rules are unclear or nonexistent? And what are its passengers to do when they discover their vehicle can’t get them where they’re going?

Accounting for the obscure

Most challenges in developing advanced technologies involve handling infrequent or uncommon situations, or events that require performance beyond a system’s normal capabilities. That’s definitely true for autonomous vehicles. Some on-road examples might be navigating construction zones, encountering a horse and buggy, or seeing graffiti that looks like a stop sign. Off-road, the possibilities include the full variety of the natural world, such as trees down over the road, flooding and large puddles – or even animals blocking the way.

At Mississippi State University’s Center for Advanced Vehicular Systems, we have taken up the challenge of training algorithms to respond to circumstances that almost never happen, are difficult to predict and are complex to create. We seek to put autonomous cars in the hardest possible scenario: driving in an area the car has no prior knowledge of, with no reliable infrastructure like road paint and traffic signs, and in an unknown environment where it’s just as likely to see a cactus as a polar bear.

Our work combines virtual technology and the real world. We create advanced simulations of lifelike outdoor scenes, which we use to train artificial intelligence algorithms to take a camera feed and classify what it sees, labeling trees, sky, open paths and potential obstacles. Then we transfer those algorithms to a purpose-built all-wheel-drive test vehicle and send it out on our dedicated off-road test track, where we can see how our algorithms work and collect more data to feed into our simulations.

Starting virtual

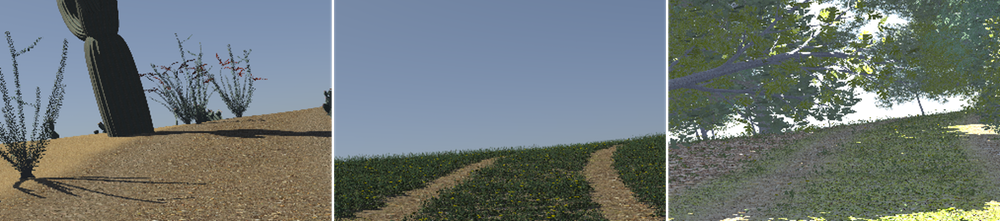

Simulated desert, meadow and forest environments generated by the Mississippi State University Autonomous Vehicle Simulator.

Photo by Chris Goodin, Mississippi State University

The system also simulates the readings of sensors commonly used in autonomous vehicles, such as lidar and cameras. The output of those virtual sensors serves as training data for neural networks.

Building a test track

A road washout, as seen in real life, left, and in the simulation.

Photo by Chris Goodin, Mississippi State University

We have selected certain natural features of this land that we expect will be particularly challenging for self-driving vehicles, and replicated them exactly in our simulator.

That allows us to directly compare results from the simulation and real-life attempts to navigate the actual land. Eventually, we’ll create similar real and virtual pairings of other types of landscapes to improve our vehicle’s capabilities.

Collecting more data

The Halo Project car can collect data about driving and navigating in rugged terrain.

Photo by Beth Newman Wynn, Mississippi State University

The Halo Project car has additional sensors to collect detailed data about its actual surroundings, which can help us build virtual environments to run new tests in.

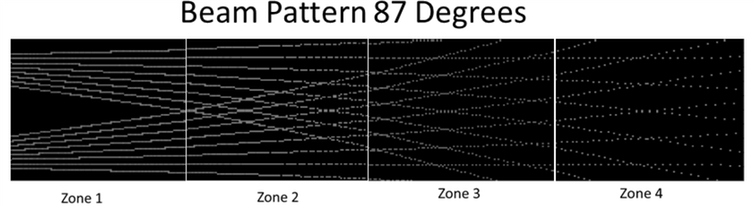

Two of its lidar sensors, for example, are mounted at intersecting angles on the front of the car so their beams sweep across the approaching ground. Together, they can provide information on how rough or smooth the surface is, as well as capturing readings from grass and other plants and items on the ground.
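To make the idea of "rough or smooth" concrete, here is a minimal sketch of one common way to turn a line of lidar ground returns into a single roughness number. It is an illustration only: the article does not say how the Halo Project team computes roughness, and the function name `surface_roughness` and the `(distance, height)` input format are assumptions made for this example. The sketch fits a straight line to the ground profile and reports the root-mean-square deviation of the points from that line, so a steady slope counts as smooth while bumps and ruts raise the score.

```python
import math

def surface_roughness(profile):
    """Return the RMS deviation (same units as height) of a ground
    profile from its best-fit straight line.

    `profile` is a list of (distance_ahead, height) pairs. This input
    format is hypothetical and chosen only for illustration.
    """
    n = len(profile)
    if n < 3:
        raise ValueError("need at least three points to estimate roughness")

    mean_x = sum(x for x, _ in profile) / n
    mean_z = sum(z for _, z in profile) / n

    # Ordinary least-squares fit of height = slope * distance + intercept.
    sxx = sum((x - mean_x) ** 2 for x, _ in profile)
    if sxx == 0:
        raise ValueError("all points share the same distance value")
    sxz = sum((x - mean_x) * (z - mean_z) for x, z in profile)
    slope = sxz / sxx
    intercept = mean_z - slope * mean_x

    # Roughness is the RMS of what the straight line cannot explain.
    residuals = [z - (slope * x + intercept) for x, z in profile]
    return math.sqrt(sum(r * r for r in residuals) / n)

# Made-up example data: a gentle, even slope versus the same slope
# with alternating 5 cm bumps and dips.
smooth = [(i * 0.1, 0.02 * i) for i in range(20)]
bumpy = [(i * 0.1, 0.02 * i + (0.05 if i % 2 else -0.05)) for i in range(20)]

print(surface_roughness(smooth))
print(surface_roughness(bumpy))
```

Removing the best-fit line first is a deliberate choice in this sketch: it keeps an ordinary hill from being scored as rough ground. A real system would also have to cope with grass and other vegetation, which return lidar readings above the true surface.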

We’ve seen some exciting early results from our research. For example, our preliminary findings suggest that machine learning algorithms trained on simulated environments can be useful in the real world.

Lidar beams intersect, scanning the ground in front of the vehicle.

Photo by Chris Goodin, Mississippi State University

As with most autonomous vehicle research, there is still a long way to go, but our hope is that the technologies we’re developing for extreme cases will also help make autonomous vehicles more functional on today’s roads.

Written by Daniel Carruth and Matthew Doude

Published in The Conversation